Unraid Server Build 2018

This is essentially the unRAID do everything build. This is my unRAID NAS server, Plex Media server, Unifi controller, Unifi Video, PiHole, and virtualization system. I’ve really put a lot of eggs into one basket with this build but sometimes it’s just very convenient to have a system that can do it all. With proper backups, there really shouldn’t be much to lose here, well maybe except a lot of time in the event everything fails. Nothing about this build speaks cost effective, budget, or cheap. However, if you manage to read all the way through, you might learn about some things you never considered before. Also, remember that many of these components were added over the course of 2 years!

The Build

Let’s get straight down to business here. Normally, I would highly recommend buying a used R710 (or equivalent) off of eBay, or shopping at orangecomputers.com or savemyserver.com, to save a few bucks and get a nice system. However, for this build, I just wanted something straight up custom and quiet (no mods required). Unfortunately, I would lose out on features like redundant power (due to price) and iDRAC 6/7/8 (but would still have IPMI). Oh you sly dog, you got me monologuing!

First we list the parts, then discuss why those parts. Remember, I did this over time, not all at once.

AsRock EP2C602-4L/D16 ($280)

Dual LGA 2011 2690’s (eBay $350)

4GB Kingston ECC KVR1333D3E9S/4G (x16 $250 total)

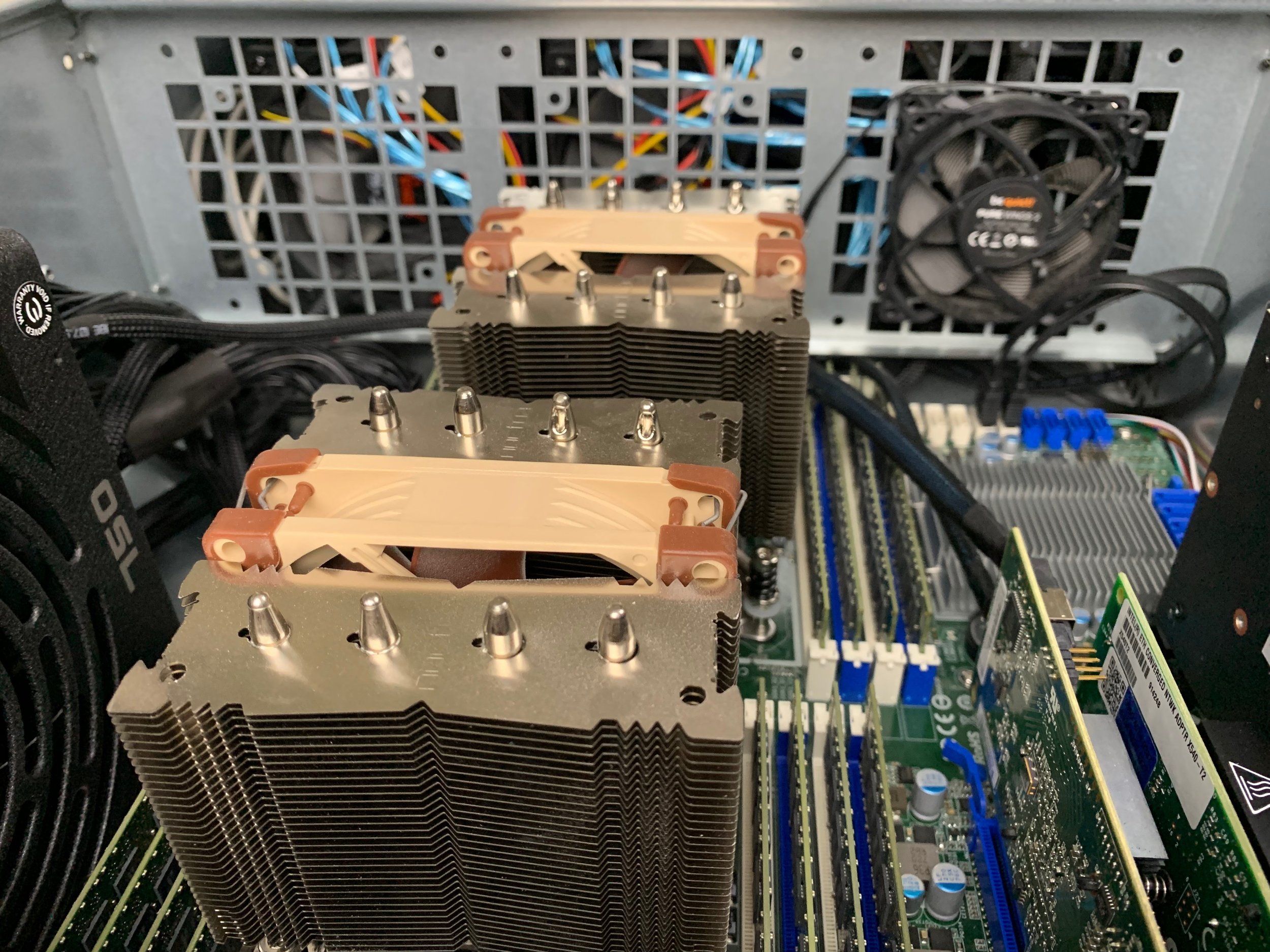

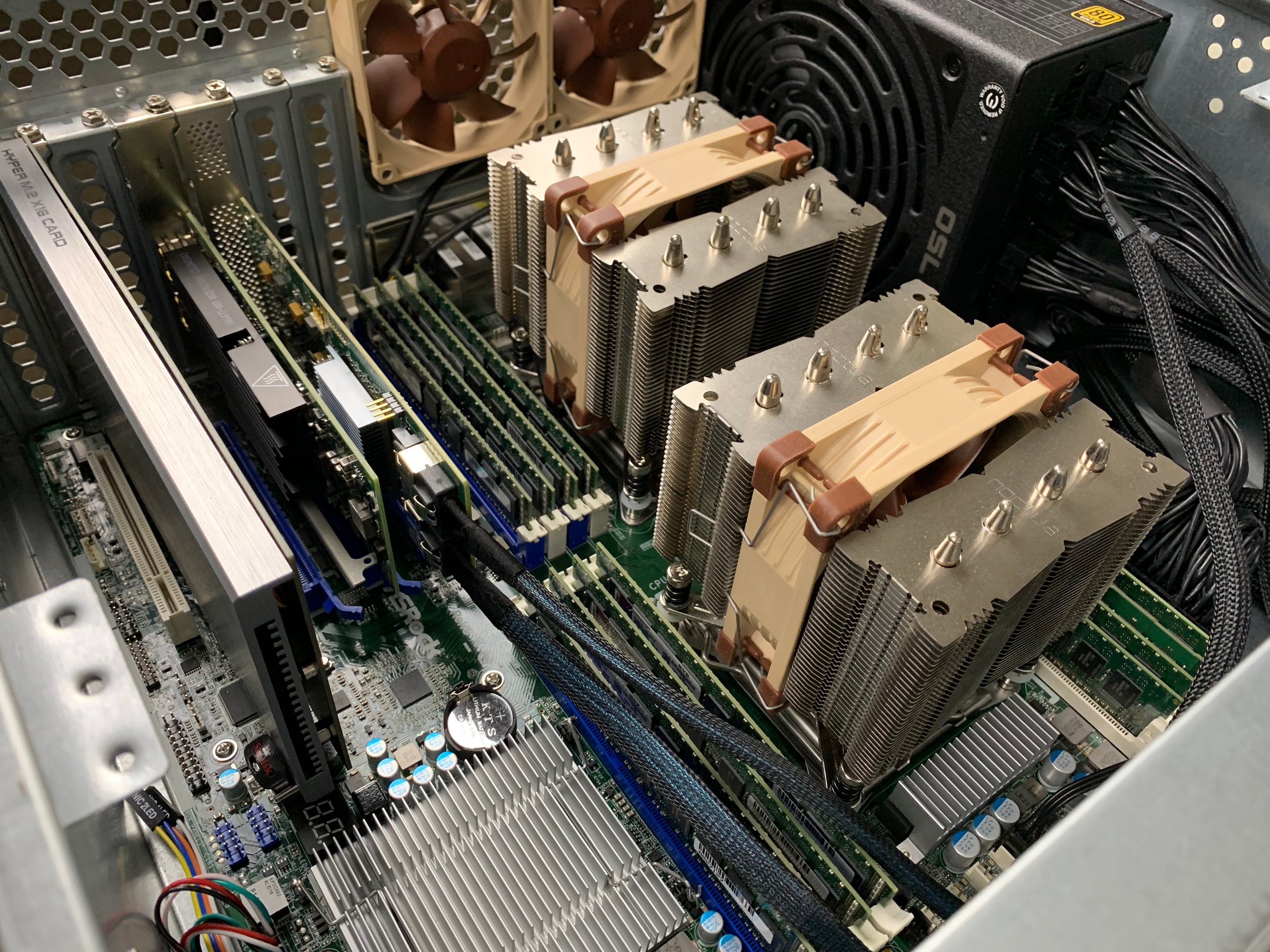

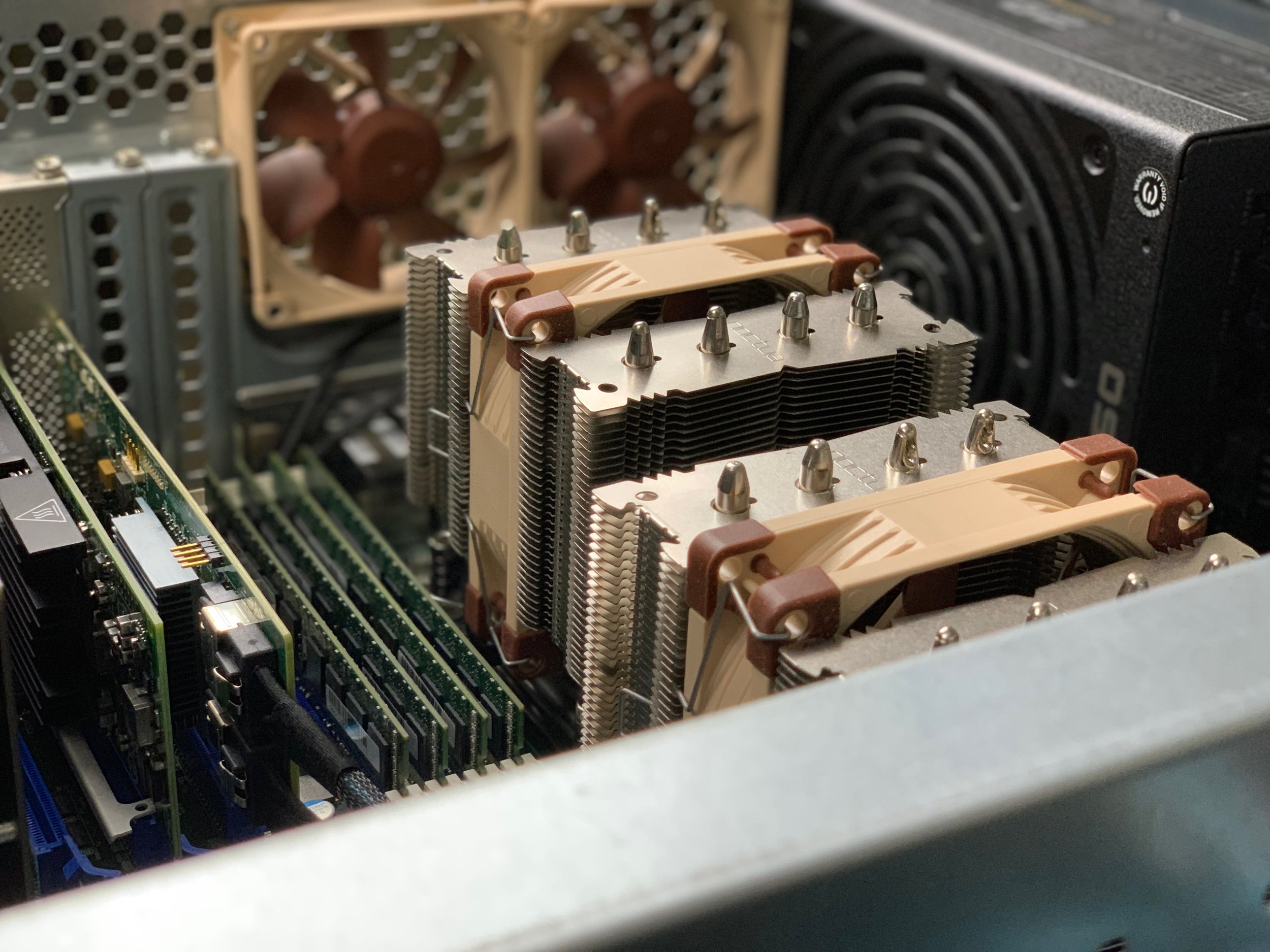

Noctua NH-D9DX i4 3U (x2 $55)

Rosewill RSV-L4500 or Rosewill RSV-L4412 ($81)

NavePoint Rails ($25)

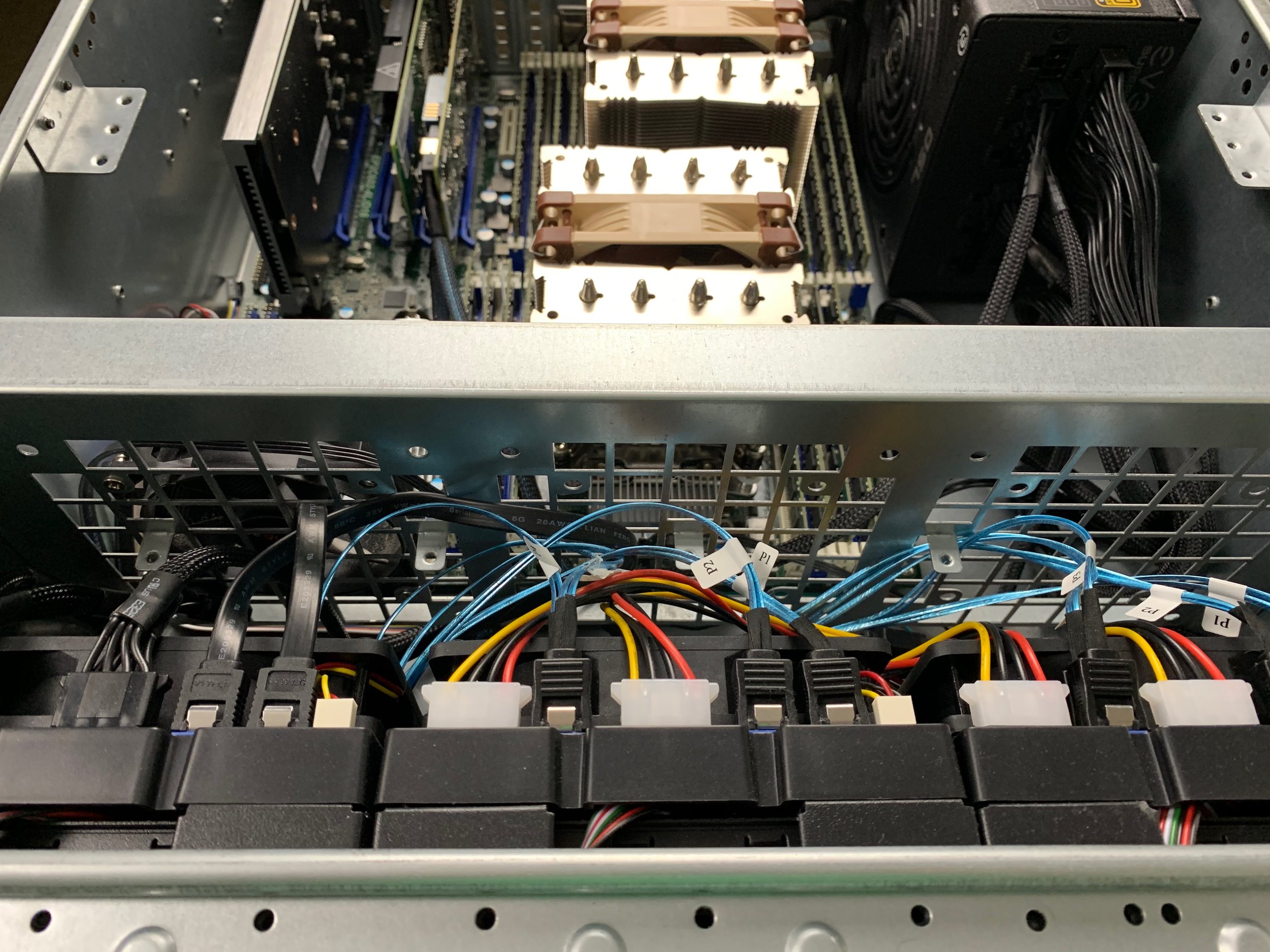

3.5 Hot-swap Drive Bays ($50)

6" SATA 15-Pin Male to Dual 4-Pin Molex Female Y Splitter (x3 $4)

SAS9211-8I ($82)

SFF-8087 to SATA Forward Breakout (x2 $12 each)

EVGA SuperNOVA 750 G2 ($120)

SanDisk Ultra Fit 16GB for unRAID OS ($6)

Toshiba thnsn51t02duk 1TB NVME ($150)

Asus Hyper M.2 x16 ($60)

Intel x540 T2 ($100)

WD Red Drives

Noctua NF-A9 PWM (x2 $15 each)

Be Quiet! 120mm PWM ($19)

iStarUSA 15u Server Rack ($150)

Total Cost approximately $1595

The Why

The Motherboard

I chose the AsRock EP2C602-4L/D16 because I specifically wanted LGA 2011 2670s or 2690s; these CPUs are insanely badass and very affordable when purchased used off eBay. Secondly, this motherboard seems to be on sale every other week on NewEgg, so you too may be able to get it on the cheap. I have only had one problem with this motherboard and that is the onboard Marvell SATA controller. unRAID does not like Marvell; my drives would spew mad errors. The only solution, and not a bad one at that, was to purchase an LSI SAS9211-8I card. This worked wonders for me. One perk of this board is the dedicated IPMI port, aka remote access via the network.

IPMI (Intelligent Platform Management Interface) is always on and allows you to manage the server even when it is powered off. Because of the Web UI, you can also see cool statistics about your hardware, like temperatures and fan speeds. You will also be able to update the BIOS/UEFI if needed or access log files for diagnostic reasons. Cool to have! Now, full disclosure: I have used IPMI maybe all of two times. It is much easier to turn the server on with Magic Packets (Wake-on-LAN, WOL) than to go to the web URL for the remote management.
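If you have never seen what a Magic Packet actually is, here is a minimal Python sketch. The packet layout (six 0xFF bytes followed by the target MAC address repeated 16 times, usually sent as a UDP broadcast) is the standard Wake-on-LAN format. The MAC address, broadcast address, and port 9 are placeholder assumptions, and the function names are made up for illustration. This is not a tool that ships with unRAID or the AsRock board, just a sketch of the idea.

```python
import socket


def build_magic_packet(mac: str) -> bytes:
    """Build a Wake-on-LAN magic packet: 6 x 0xFF, then the MAC repeated 16 times."""
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected a 6-byte MAC address, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)
    return b"\xff" * 6 + mac_bytes * 16


def send_magic_packet(mac: str, broadcast: str = "255.255.255.255", port: int = 9) -> None:
    """Send the magic packet as a UDP broadcast (address and port are assumptions)."""
    packet = build_magic_packet(mac)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Broadcasting must be enabled explicitly on the socket.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (broadcast, port))


# Example (placeholder MAC, replace with the MAC of the server's NIC):
# send_magic_packet("00:11:22:33:44:55")
```

Wake-on-LAN also has to be enabled in the BIOS/UEFI and on the NIC for the server to react to the packet.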

Thirdly? I knew I wanted to, at the very least, have a dual socket motherboard so that way I would be able to support dual CPUs. Having two CPU’s would give me many extra cores and threads for several VMs or docker containers. The board also has a pretty decent amount of memory slots (16 total), which allows you to cheap out a bit on 4GB DDR3 DIMMs instead of 8GB DIMMs. Just something to consider, well unless you want to go ham and get 16GB or 32GB DIMMs. I haven’t confirmed this but I believe the most RAM this board will support is 128GBs of unregistered ECC memory and 512GBs of registered ECC memory. Something to keep in mind.

The board also has on-board Intel quad 1Gbps ethernet ports, which is perfect for redundancy or pass-through. Another great thing about the AsRock EP2C602-4L/D16 is that it supports bifurcation, which is what I needed since I wanted to split a PCI-E x16 slot into two x8s for my NVME SSDs.

Lastly, this board has an on-board USB 2.0 slot, which is perfect for the SanDisk Ultra Fit 16GB that stores the unRAID OS itself. The reason this is a perk is because I never have to worry about the USB drive being unplugged while the system is running. Be warned though, the USB port is very close to the PCI-E lanes, so if you get a thumb drive that is too tall, it might interfere with the PCI card you are trying to insert into the system.

You should note that you can sometimes get the EP2C602-4L/D16 cheaper on NewEgg than Amazon, especially when it’s on sale. I managed to get this board for $280 off Amazon. Pretty expensive on its own, especially when you consider you can get a Dell R720 with dual 2670s, redundant 750 watt PSUs, and 16GBs of RAM for around $560.

Central Processing Units- CPUs

Originally, I purchased dual LGA 2011-v1 2670s (SR0KX) for $118 total, not each, which was a great deal. Then somewhere along the line I decided to get a second server; the second server ended up with the 2670s and this build ended up with dual 2690s. I got the dual 2690s for a whopping $350!!!! For me this was a worthwhile upgrade because there are times when I have 2 to 4 people streaming from my Plex server at the same time. The system is also host to a Space Engineers server, which at times can use the hell out of the CPUs. The 2670s were great for a long time, almost 2 years in fact, but over time I added more and more services to this system, so it was time for a CPU bump. If you are looking to save a few bucks, stick with the 2670s, they are beasts.

The 2670s are 8 Cores/16 Threads with a base clock speed of 2.6GHz and Turbo Boost speed of 3.3GHz, as well as a peak power usage of 115 watts. The 2690s are in a very similar boat, also with 8 Cores/16 Threads but with a base clock speed of 2.9GHz and boost clock of 3.8 giggly hurtz. The 2690s, however, eat electricity like it’s going out of style; they are capable of using up to 135 watts each! That is some serious power draw. Thankfully, they spend more time at idle than at full load. Phew….

Quick benchmark scores from PassMark:

Dual 2670s

Multithreaded score - 18197

Single threaded score - 1625

Dual 2690s

Multithreaded score - 19727

Single threaded score - 1811

Part of the reason I bumped up to the 2690’s was for better single threaded performance, Space Engineers will easily hit 95%~100% utilization on a single core, so better clocks will help churn through all that data.
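To put a number on that bump, here is a tiny Python sketch that turns the PassMark scores quoted in this post (18197 / 1625 for the dual 2670s, 19727 / 1811 for the dual 2690s) into percentage gains. The dictionary layout and function name are just for illustration, and a PassMark ratio is only a rough proxy for how a real workload like Space Engineers will behave.

```python
# PassMark scores as quoted in this post.
SCORES = {
    "dual 2670": {"multi": 18197, "single": 1625},
    "dual 2690": {"multi": 19727, "single": 1811},
}


def percent_gain(old: float, new: float) -> float:
    """Relative improvement of new over old, in percent."""
    return (new - old) / old * 100


multi_gain = percent_gain(SCORES["dual 2670"]["multi"], SCORES["dual 2690"]["multi"])
single_gain = percent_gain(SCORES["dual 2670"]["single"], SCORES["dual 2690"]["single"])

print(f"Multithreaded gain:   {multi_gain:.1f}%")
print(f"Single threaded gain: {single_gain:.1f}%")
```

The single threaded gain comes out larger than the multithreaded one, which lines up with why the upgrade mattered for a game server that pins one core.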

Noctua LGA 2011 CPU Heatsinks

These heatsinks fit on square and narrow ILM LGA 2011 sockets. I mention this because some of the servers I have had in the past have narrow ILM sockets and finding decent heatsinks for them is troublesome. The only downside with these is that they are made for 3u and up server chassis. They will not fit in a 2u server chassis. There isn’t much to say on these, they are quiet as hell and do a great job of keeping the temps down. 11 out of 10 would recommend.

RAM - Kingston ECC

I specifically chose the 4GB Kingston ECC KVR1333D3E9S/4G because a rep at AsRock Rack recommended it for this board. It’s well, RAM. It performs as you would expect… I am actively looking at replacing the RAM with some Samsung 8GB DDR3 1600Mhz RAM; model M393B1G70EB0-YK0. This RAM is compatible with the AsRock EP2C602-4L/D16 board and is readily available all over eBay. Why you ask? Transcoding in Plex and Handbrake can eat up RAM. There have been a few times the entire unRAID server has locked up due to all of the RAM being utilized by Docker apps. So moving from 4GB sticks to 8GB sticks will be very very helpful, we will move from 64GBs to 128GBs. If you are reading this to get ideas for a personal build, I think my honest recommendation would be, just buy the most and cheapest RAM you can.

Server Chassis

Originally, I had purchased the Rosewill RSV-L4500 to do a rack mounted gaming PC build. After some…. challenges, I decided to drop that project, which, if you are curious, you can read about here. So, after the change in projects, I ended up outfitting the RSV-L4500 with these 3.5 Hot-swap Drive Bays. These “hot-swap” bays allowed me to make room for 12 hard drives that would be more easily accessible in the event of a hard drive failure or if I needed to add storage in the future, all while keeping the server powered and racked. The 3.5 Bays also use Molex connections for power, so if you don’t have a PSU that has Molex you may need these adapters. The 3.5 hot-swap bays work well enough despite feeling like very cheap plastic. Now, by default, you can use the RSV-L4500 for 12 hard drives but they are not easily accessible. You will have to remove the top panel of the server chassis to access the drives. An alternative to doing what I did would be to just buy the fully loaded Rosewill RSV-L4412.

The server chassis itself, in my opinion is perfect for what it is. Price is great and quality is on par with the price. As far as rails go, I have no clue which sliding rails work with the RSV-L4500. I ended up getting these instead for about $30 buckaroos. The rails work well enough and honestly, I hardly ever de-rack the server for maintenance so I’m not too worried about not having sliding rails. But, I did de-rack it for pictures to add to this post :D.

Power Supply

I bought an EVGA SuperNOVA 750 G2 mainly because of the price and because it had cables/ports to supply two CPUs with power. Remember, the AsRock board has two CPU power inputs, so it is very important we have a PSU that can deliver power to both CPUs. I suppose another reason I chose to buy the EVGA PSU is because it’s a brand that I have grown to trust over the years and have yet to have a single thing from them fail or be problematic. More about the power supply itself, 750 watts should be just enough for the two 2690’s at full load and a little extra on top. Hell, at one point I had an EVGA GTX 1050 in the server and things ran fine. Never had any power issues. I really wanted redundant power but buying a redundant PSU can be close to $500, which is too rich for my blood. Redundant power alone pretty much seals the deal on getting a used R710/720/820 or really any used server. I’m not really sure what else needs to be said but the PSU is always worth a mention (in my mind at the very least).

LSI RAID/HBA card

Initially, I had planned to use the on-board SATA ports on the AsRock motherboard but this gave me so many problems because of the Marvell SATA controller; you can read more about this here. So because of those problems I chose to purchase the SAS9211-8I, mainly because of its low price. Is this the best card? Probably not. Is there a better card? Probably. Which card is better? No idea. I got this card because after very brief research it was one that was often recommended for cheap, quick, and easy use. The SAS9211-8I only supports up to 8 drives, which might be a setback for this server because I have 12 bays. However, I have no friggin clue when or if I will reach the point where I fill 8 bays. Probably by the time I fill the 8 bays, 8 or 10 terabyte hard drives will be so cheap, I’ll just replace failing drives with larger ones. Hell, I might just buy up a bunch of 10TB EasyStores and be solid for years to come, more on this later. The point is, this card is more than sufficient for my build and was an easy place for me to save a buck. It worked with unRAID right out of the box, I didn’t have to “flash it to IT mode” or anything. It works with my 4TB ~ 10TB drives no problem.

As you may have noticed, this card has mini-SAS ports, or SFF-8087 (Small Form Factor), so you may need to buy two of these SFF-8087 to SATA Forward Breakout cables to connect all 8 drives to one card. In the future, if I really ever needed to, I could buy a second card, but unless I get into some serious Data Hoarding, which I highly doubt because I lack the mental aptitude, I don’t see that happening.

This card may not be ideal because it doesn’t have a battery backup or on-board RAM. But I think both of those needs are outside of my scope. I understand it can be detrimental to lose power suddenly and have a bunch of data get corrupted, but I’m not running a RAID, and with parity checks every second day I have little to lose, or so I think. Plus with backups of everything that is important, I’m really not worried at this point in time. Having a better card just doesn’t fit my needs or wants right now. I would highly recommend doing research about this; I’m not very knowledgeable about RAID cards, to be clear. Also, the server is connected to a UPS, so I should be covered in the event of a power failure.

LSI card in the top PCI-E slot with two breakout cables

Hard Drives, SSDs, and NVME drives

This is where the server has evolved most for me. Originally, I was using two 240GB Intel 540 SSDs as cache drives for unRAID. However, as time went on, I began transferring larger and larger files to the server and my transfer speeds would drop from 110MB/s to 30, maybe 45MB/s if I was lucky. After a solid year of dealing with LONG transfer times, an upgrade to something faster/better was sorely needed. This is where my “Road to 10Gbe Networking” started.

Essentially I needed to do a couple things to achieve faster transfer speeds in unRAID. The first was to upgrade to 10Gbe and NVME SSDs. SATA SSDs worked great but anytime the file was larger than 20GBs my speeds would plummet to 30MB/s. This would even happen after “Trimming” and completely emptying the SSDs so they had 99% free space. A very long time ago I bought two Toshiba thnsn51t02duk 1TB NVME SSDs for $150 each off of Craigslist. These drives would become the cache drives I use today. Well technically just a single drive because I think one of them is failing. So no RAID 1 cache storage for me. </3

Now, as you may have noticed, the AsRock EP2C602-4L/D16 does not have an on-board M.2 slot but that doesn’t mean we can’t take advantage of NVME storage. Thank the gods of old that engineers developed a way to allow us to have PCI-E based storage. Using the Asus Hyper M.2 x16, we are now able to use both NVME M.2 drives in this build. Now, it is very important to note that in order to use 2 or more drives in a single PCI-E x16 slot, your motherboard must support bifurcation. What is bifurcation, you ask? It is essentially the ability for the lanes of a PCI-E slot to be split into multiple smaller groups. So in this case, in the BIOS of the AsRock motherboard, I changed my PCI-E x16 slot to be split into x8 x8. This allows me to use both NVME drives at the same time. Without this feature/function, unRAID would only be able to see one drive at a time. I have no clue if a Dell R720 supports this; I suppose it would, but I have never seen it in the BIOS or iDRAC settings.

Now the second most important part to avoid slow transfers is, well, a 10 Gigabit NIC. I went with a genuine Intel x540 T2. How do I know it is genuine? Well, it has this fancy sticker on it that allows me to look up the trace code on Intel’s website. Intel calls this sticker a “YottaMark”; with those codes, you are literally able to verify that the NIC is in fact from Intel. This is very important! You could use the Amazon x540 T2 for your build, since some people have claimed these NICs work fine for them with unRAID, but I personally have not verified this. Just be wary while shopping on eBay or, well, the internet in general. Remember, no YottaMark more than likely means it is not genuine. Sorry for that bit of a tangent, let’s now discuss why I got a 10 Giggly bit NIC.

LSI SAS9211-8i

Intel x540-t2

ASUS Hyper m.2 pcie nvme card

The 10 Gigabit NIC is important because we need to transfer as much data as possible and as quickly as possible before the “cache” on the Toshiba NVME drive is filled. If the cache on the NVME drive gets full, write speeds will drop to 300ish MB/s or something to that tune. Basically, if the cache’s cache fills up, you start writing directly to the NVME drive’s flash and your speed drops to whatever the sustained write speed of the drive is. Sometimes this work can be offloaded into the RAM of your server, which really helps. I have no idea if unRAID supports this but if it does, having “too much” RAM isn’t always a bad thing. This is what I believe is happening; I could be totally wrong. I’m just guessing here. The only thing I can say for certain is, the NVME SSD allows me to transfer 20+GB files for an easy minute or two before write speeds drop to around 300MB/s.
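To get a feel for what these speeds mean in practice, here is a rough Python sketch that estimates how long a 20GB file takes at the speeds mentioned in this post (30MB/s, 110MB/s, and 300MB/s), plus the theoretical line rate of 10GbE. The line rate ignores protocol overhead, so real transfers will be slower; the function names and decimal units (1GB = 1000MB) are my own assumptions for the example.

```python
def transfer_seconds(size_gb: float, speed_mb_s: float) -> float:
    """Seconds needed to move size_gb gigabytes at speed_mb_s megabytes per second."""
    return size_gb * 1000 / speed_mb_s


def line_rate_mb_s(gigabits_per_s: float) -> float:
    """Theoretical link speed in MB/s (8 bits per byte, no protocol overhead)."""
    return gigabits_per_s * 1000 / 8


FILE_GB = 20
speeds_mb_s = {
    "SATA cache after the slowdown": 30,
    "Gigabit networking on a good day": 110,
    "NVME after its cache fills": 300,
    "10GbE theoretical line rate": line_rate_mb_s(10),
}

for label, speed in speeds_mb_s.items():
    print(f"{label}: {transfer_seconds(FILE_GB, speed):.0f} s at {speed:.0f} MB/s")
```

Even the “slow” 300MB/s case is roughly ten times faster than the old 30MB/s slowdown, which is why the upgrade felt so dramatic.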

For the meat of my storage I have been sticking to WD Red drives, 4TB and 6TB, because I trust WD and they can be had on the cheap when they go on hella sale. I have purchased the 4TB WD Red drives for as low as $114. Currently, unRAID is set up to have dual parity, so both the 5TB and 6TB drives are set as parity and are totally unusable for storage. This leaves me with two 5TB drives and a single 4TB drive to store whatever I please on them. I stick to Western Digital because for me, their drives tend to last forever and the one time a drive did fail, Western Digital replaced it for free. In fact, the 6TB drive is what Western Digital replaced my 5TB Red drive with when it failed. If you are really looking to save a buck and maximize your storage capacity, you should consider buying WD EasyStores and Elements drives off of Amazon or Best Buy. The 8TB and 10TB drives go on sale regularly; the only catch is, you have to “shuck” (aka remove) the drives from their housing and may run into a 3.3 volt issue. For home storage, the “white label” drives are more than adequate. This is a rabbit hole on its own, you can read more about this here or on Reddit.
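As a quick sanity check of that layout, here is a small Python sketch of how usable space works out in an unRAID style array: parity drives contribute no storage, and each parity drive has to be at least as large as the largest data drive. The drive sizes are the ones from this post; the function name is made up for the example.

```python
from typing import List


def usable_capacity_tb(parity_tb: List[float], data_tb: List[float]) -> float:
    """Return usable array capacity, checking the parity size rule first."""
    largest_data = max(data_tb)
    for parity in parity_tb:
        # A parity drive smaller than the largest data drive cannot protect it.
        if parity < largest_data:
            raise ValueError(
                f"{parity}TB parity drive is smaller than the largest data drive ({largest_data}TB)"
            )
    # Parity drives hold no user data, so only data drives count.
    return sum(data_tb)


parity_drives = [6, 5]   # dual parity
data_drives = [5, 5, 4]  # what is left for storage

print(f"Usable capacity: {usable_capacity_tb(parity_drives, data_drives)}TB")
```

One thing this makes obvious: with a 5TB parity drive in the mix, any future data drive larger than 5TB means upgrading that parity drive first.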

A “shucked” WD 10TB Easystore

Extra Fans

I really only added the Noctua NF-A9 fans because I already had them laying around from a previous build that I did many years ago. Figured it would be worth adding them in the rear to help exhaust some air out of the case.

Now the Be Quiet! fan I intentionally added to push air over the LSI SAS9211 card and the Intel X540 T2 because those two components get hot and do not have any direct air movement over them. Theoretically, this should help prevent thermal throttling for when the cards get too hot.

Roles, Services, Function, and IWHBYD

Wow, where to start… So I think like most people, I wanted to run a media server that had plenty of room for growth and storage. After seeing unRAID for the first time and learning just how easy it was to add storage to it in the future, I was immediately sold. I bought the Pro edition almost immediately after getting Plex set up. unRAID is incredibly flexible and great for beginners like me and more experienced people alike. So, I guess let’s break down each “category” if you will, similar to what I did above. We will start with the OS.

unRAID

This OS was recommended by people on Reddit for its ease of use, expandability, usability, and great community support. With that in mind and knowing full well I wanted a media server, I took the plunge. Almost immediately I was in love. Any questions I had were answered by easy to follow videos or forum posts. It was definitely the right choice for me, despite never trying FreeNAS or other direct competitors.

What makes this OS more appealing than, let’s say, Windows is that I can run Plex or other apps as Docker containers versus installing the application in a virtual machine. Using containers truly does give the application direct access to bare metal hardware and gives these apps a nice fresh breath of air. They load faster and work more quickly than if they were on a virtual machine (VM). When I initially started playing with Plex, I used it in a Windows VM on Windows Server 2012 R2, which was pretty good for a while but trying to play around with constantly mounting network shares was getting annoying. With unRAID this was eliminated pretty quickly. Also, if a Docker container doesn’t exist for an application I want or need, unRAID uses KVM (the hypervisor built into the Linux kernel), which allows us to spin up virtual machines for any operating system on demand. I have used unRAID to host Hackintosh VMs in the past, which to me is truly impressive.

First and foremost, unRAID makes a great, easy to use NAS (network attached storage) device. It supports NFS, SMB, and AFP, so setting up a file share is a cake walk. You can create users within unRAID to access these shares with relative ease. LimeTech did an amazing job with the WebUI; it’s become very simple to use. If you are having problems or have questions about specific items within unRAID, there is a great community who will help you find an answer pretty quickly. I just wanted to take a moment and list some notable apps you could use with the built in Docker system.

Community Apps (This is a must have, it helps you search for apps created by the unRAID community)

Plex

Deluge

OpenVPN

PiHole

Sonarr

Radarr

CouchPotato

Home-Automation-Bridge (For things like Amazon Echo)

NGINX (can also use lets encrypt)

Minecraft Server

Starbound Server

TeamSpeak and Mumble

FoldingAtHome

Steam Cache Server

Unifi and Unifi Video

I could probably talk about unRAID forever but I’m going to try and keep it short. This is my OS of choice and I would recommend it to anyone looking for a simple solution to common needs that also leaves room to easily begin exploring more difficult challenges. So how about we just talk about some of the applications and VMs I use within unRAID, maybe it will help you for future planning.

Plex

Well, first up is Plex. Originally I took the easy way out and was using Windows Server 2012 R2 with Plex installed on it. This worked well for a while but when I needed to upgrade my storage capacity, things got a bit difficult, and I lost a ton of data. I needed more physical storage space and I needed a server that would allow me to grow with relative ease. But because I’m a Windows noob I set myself up for failure and lost a ton of media when transferring over to the new server. Still to this day, I’m not entirely sure what happened but thankfully I had a backup of my media. So after doing some research and based off some recommendations, I was led to unRAID.

The key thing to remember about Plex is your CPU score. Your CPU score will determine how many quality streams you can support.

Plex Recommends the following:

4K HDR (50Mbps, 10-bit HEVC) file: 17000 PassMark score (being transcoded to 10Mbps 1080p)

4K SDR (40Mbps, 8-bit HEVC) file: 12000 PassMark score (being transcoded to 10Mbps 1080p)

1080p (10Mbps, H.264) file: 2000 PassMark score

720p (4Mbps, H.264) file: 1500 PassMark score

Remember, this is a score that assumes a single stream! So, if you are like me and have up to 7 potential simultaneous streams, this is important to know. The dual LGA 2011 2670s have a multi-threaded PassMark score of 18197 and the dual 2690s have a PassMark score of 19727, which means both of these sets of CPUs are more than capable of handling a few 1080p streams at the same time. As time has gone on, I have started to expand my 4K HDR library and so far it has been a great experience. However, this is one local network stream; if I had 6 other people trying to stream that same 4K video, someone’s 720p transcode is going to take a hit.
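Here is a back-of-the-envelope Python sketch of that math, using the per-stream PassMark figures from the Plex list above and the two CPU scores from this post. It treats the PassMark score as a simple budget that streams subtract from, which is a simplification on my part (real transcoding does not scale that neatly), and the function names are made up for the example.

```python
from typing import Dict

# PassMark score needed per transcoded stream, from the Plex guidance above.
PASSMARK_PER_STREAM = {"4K HDR": 17000, "4K SDR": 12000, "1080p": 2000, "720p": 1500}

# Multi-threaded PassMark scores quoted in this post.
CPU_SCORES = {"dual 2670": 18197, "dual 2690": 19727}


def max_streams(cpu_score: int, quality: str) -> int:
    """How many streams of one quality fit in the CPU's PassMark budget."""
    return cpu_score // PASSMARK_PER_STREAM[quality]


def leftover_score(cpu_score: int, streams: Dict[str, int]) -> int:
    """PassMark budget remaining after a mix of streams (quality -> count)."""
    used = sum(PASSMARK_PER_STREAM[quality] * count for quality, count in streams.items())
    return cpu_score - used


for cpu, score in CPU_SCORES.items():
    counts = ", ".join(f"{max_streams(score, q)} x {q}" for q in PASSMARK_PER_STREAM)
    print(f"{cpu}: {counts}")

# One 4K HDR transcode on the dual 2690s leaves very little for anyone else.
remaining = leftover_score(CPU_SCORES["dual 2690"], {"4K HDR": 1})
print(f"Left after one 4K HDR stream: {remaining} "
      f"(room for {remaining // PASSMARK_PER_STREAM['720p']} x 720p)")
```

By this rough budget, a single 4K HDR transcode eats most of what the dual 2690s have, which is exactly why extra streams on top of it would start to suffer.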

I was recently forced to move to a wireless media system at home because my living room is too far from my server and then all the way down some stairs. There is no way I can run Cat5e or Cat6 cables to that TV. Well, there is no inexpensive way at the very least. I’m using an Apple TV 4K as my primary local client and currently have a Linksys WRT1900AC acting as a Wireless Access Point. Occasionally, I might have some dips in quality on 4K HDR movies but otherwise, there are zero issues. I love Plex.

Unifi and Unifi Video

Originally I was using Unifi and Unifi Video in a docker container and it worked great. However, as I began to experiment more with Unifi, I found that the docker app was lacking. Lacking in the sense that if I needed to swap to a newer or older version of the software, I was unable to do it. I was stuck at whatever version the creator of the docker container had used. This was quite the bummer. So, in an effort to better support my ever-changing and explorative demands, I switched to using an Ubuntu Server VM as a host for Unifi and Unifi Video. This has worked surprisingly well for almost 2 years now. Ubuntu Server is perfect for the Unifi software. When the camera records video, all of that video is written directly to an NFS share that uses the cache within unRAID. This way I don’t have to worry about poor quality in the recordings and the video files will be transferred to the array every night. Using an NFS share within Ubuntu Server is very simple and more practical than creating a VM with “extra” space for video files that I would then need to manage/move with a script or some good ol’ fashioned elbow grease.

I legitimately don’t know what else needs to be said about this… It has run flawlessly since I’ve been running on Ubuntu. Oh maybe VM specs? Nothing fancy here, I allocated 2 cores and 4GB’s of RAM. I don’t think I needed to give the VM that much RAM but what the heck. I have plenty to go around and it isn’t going to hurt anything.

Handbrake

I’m using Handbrake with unRAID to automatically re-encode video for my YouTube channel. I’ve set up Handbrake with watch folders so that when I drop new media into the watch folder, it automatically does the re-encoding for me. Then when I’m ready I can pull down video files to be worked on. This essentially came about because Final Cut Pro X does not support HEVC/H.265 video. So all the video that I capture from my camera needs to be converted into a format that FCP supports.
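If you are wondering what a watch folder boils down to, here is a minimal Python sketch of the idea. It is not how the Handbrake docker app is implemented; it is just an illustration that looks for videos with no converted copy yet and builds a HandBrakeCLI command for each. The folder paths, the list of file extensions, the choice of H.264 (`-e x264`) as the target, and the quality value of 20 are all assumptions for the example.

```python
import subprocess
from pathlib import Path
from typing import List

# File types treated as source video (an assumption for this sketch).
VIDEO_SUFFIXES = {".mov", ".mp4", ".mkv"}


def pending_files(watch_dir: Path, output_dir: Path) -> List[Path]:
    """Return source videos in watch_dir that have no converted copy in output_dir."""
    return sorted(
        src
        for src in watch_dir.iterdir()
        if src.suffix.lower() in VIDEO_SUFFIXES
        and not (output_dir / f"{src.stem}.mp4").exists()
    )


def build_command(src: Path, output_dir: Path) -> List[str]:
    """Build a HandBrakeCLI call that re-encodes src to H.264 at constant quality 20."""
    dst = output_dir / f"{src.stem}.mp4"
    return ["HandBrakeCLI", "-i", str(src), "-o", str(dst), "-e", "x264", "-q", "20"]


def process_once(watch_dir: Path, output_dir: Path) -> None:
    """Encode every pending file once; needs HandBrakeCLI installed and on the PATH."""
    for src in pending_files(watch_dir, output_dir):
        subprocess.run(build_command(src, output_dir), check=True)


# Example with hypothetical share paths, e.g. run on a schedule:
# process_once(Path("/mnt/user/handbrake/watch"), Path("/mnt/user/handbrake/output"))
```

A real watch folder also has to make sure a file has finished copying before it starts encoding, which this sketch skips.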

Handbrake will use ALL CPU cores and threads that are available to do the encoding. So if you really wanted to stress out your system, Handbrake is a great way to do it. Handbrake can also be useful for changing 4K HDR content to 1080p content if your hardware isn’t quite up to the challenge of 4K Plex streaming. Now remember, I never even considered the idea that I would need to do encoding jobs on my server, so having those nice 2670s and 2690s has become very useful. Really, lucked out here.

If you are curious, there is this video to see it all go down. Please note, this is my test server in action and not “Transcencia”.

OpenVPN

I use OpenVPN to connect to my server when I travel; this way I have 24/7 access to all my files and content. So, if I need to re-encode some media I can do it, or if I need to do some server maintenance, I can do it all remotely. OpenVPN is a great complement to have if you are a frequent traveller. Connecting to a sketchy WiFi hotspot at a coffee shop or hotel? Connect to your VPN and have all of your traffic encrypted. This can also be useful if the hotel blocks ports that Mumble, TeamSpeak, or Discord use. This way you can still game or chat with your online buddies, if you are into that sort of thing. This has been great to have and it too was something I never even truly thought I would need. It has come in handy several times.

PiHole

I use a PiHole docker container in unRAID to block ads on my entire home network. It feels like ads have gotten way worse. Some YouTube videos will have several ads in a 15 minute video. It is just insane. I used to be all about installing browser extensions to block ads but now I have devices in my network that do not support ad blocking. So using PiHole is crucial to stop ads that are 5 or 10 minutes long. Hell, it’s getting to the point where you can’t even skip a 30 second long ad.

In order to use PiHole with Unifi, you have to tell Unifi that PiHole will be the DNS for your local network. If you do not do this, you will have a bad time. Hell at one point I completely lost internet connectivity and local connectivity. After a bit of a struggle and some online assistance, I managed to get it working and OoooOh Boy, is it magical.

PiHole was just another docker container that I never thought I would need or use. But as time went on, I realized having it would be amazing. Again, I got really lucky that I had the extra horse power to support this endeavour.

Virtualization

I’ve had a myriad of different VMs within unRAID. The most notable of which is a Hackintosh VM. It’s surprising how something so complicated became much easier when you don’t have to worry about kext files or guides that are either out of date or the original creator of the guide just disappeared. I’ve attempted several Hackintosh builds over the years and only 1 was ever successful and that successful one was a VM and not really a build. I have tried a Hackintosh VM on a LGA 2011-v3 5960x build before with ZERO success. I even went as far as exporting a working VM image to a different unRAID OS, making sure to update the cores and mounting points, and the damn VM still wouldn’t boot on a 5960x… So frustrating.

I’ve also run a Bitcoin Full Node as a VM. Sometimes not even just one but several. This was really just for fun because I wanted to see how many nodes I could have running at once. This project ended up failing because for some reason the database would always get corrupted after running a few days. I doubt it is an OS problem or storage problem, because I passed through entire hard drives. I never really got to the bottom of it but I ended up dropping the project altogether.

Every now and again I will spin up a VM to mess around with Folding At Home (FAH). I literally mean mess around. The VM performance isn’t really that useful for FAH but nonetheless, that doesn’t stop me. Using a VM restricts the number of cores FAH has access to, so I don’t have to worry about overheating the CPUs. There really isn’t much to be said about FAH because it’s been about a year since I last played around with a VM. My server gets a heck of a lot of usage now so having available cores and threads plus RAM is becoming more and more important.

Every now and again, when a new OS releases I will spin up a VM to check it out. For instance, I played around a bit with Red Hat 7.0 and Windows Server 2016. Sometime soon, I will check out Windows Server 2019 too. Now this is solely out of curiosity more than anything; I really enjoy playing around with new OSes even if I don’t use them for anything. If the OS runs well or there are new features that are cool enough, then I will probably install the OS on one of my extra servers that are lying around.

In the rare case where unRAID doesn’t have a container and you lack the skills to make one (like me), you can always spin up a VM with relative ease. Having the ability to create VMs is amazing. It can really save your arse if you are about to be in a pickle. Hell, let’s say you wanted a system that could just do it all, unRAID could be the host OS for you. You could have a VM for gaming and get near identical performance to a bare metal installation. All the while you are gaming, you have a Plex server running for friends or family, as well as that sweet network storage. You can really get a lot of versatility here.

IWHBYD

In truth, this “build” was more of an evolution. I originally had purchased a Quanta S210-X22RQ, 192GBs of RAM, and two 2670s, which in total was just shy of $800. The Quanta server had dual 1100 watt power supplies and all the bells and whistles one could want. However, that thing was louder than a jet engine inside your ear canal. I did do some modifications to quiet it down and it was an awesome server at the time, but between poor BIOS and driver support from the OEM and reduced cooling capacity, it was really hurting me when it was pushed to the limit. I ended up selling the Quanta S210-X22RQ for $500, with some RAM and CPUs, which I then turned around and used for my custom build. I’ve named my custom server Transcencia, after a Starbase from a game called Sins of a Solar Empire. Transcencia felt like the perfect name because it is a big 4u server with tons of power and vast amounts of superiority over anything else I have ever owned, much like the starbase in the game.

This server went from being a simple media server to the ultimate unRAID build over time. Which is great because now, for example, I have begun looking at ways to improve my quality of life at home, e.g. blocking all ads on my home network. Also, because of the 4u chassis I am able to cram all sorts of PCI-E devices into the server and never worry about heating issues because of its size. With a normal desktop PSU, I can stick a full size graphics card into the server AND power the dang thing. Used Dell or HP servers typically don’t have PCI-E cables to power PCI-E devices, which really sucks if you are trying to have a powerful graphics card in your server. Also, if you stick in a PCI-E device, the server isn’t going to freak out and spin the fans at full speed because the PCI-E device is not an “approved/supported” device. I’m looking at you, Dell.

You are lucky now that 1TB NVME SSDs are cheaper than ever. So having that as a cache is reasonable (IMO). If NVME drives are still too rich for your blood, then you have the advantage of getting very cheap and amazing SATA 1TB SSDs for close to $100. This was nearly impossible 3 years ago. I spent $580 on two 480GB SSDs 4 years ago and those SSDs are not nearly as fast or awesome as more modern SATA SSDs.

Now, let’s say you are not looking for the ultimate build today but could see yourself getting there in the future, then you should definitely consider building a custom server similar to mine. If that doesn’t sound like you and you are just in it for simplicity, you can totally save money by buying a Dell R720 with two 2670s, 32GBs of RAM, PERC H310, a Dell Intel X540-T2, iDRAC 7 Enterprise, dual 750 watt PSUs, and a rail kit for about $750 (at the time of writing). This will be WAY more than enough for a media server and give you plenty of extra horse power for multiple streams. Hell, you can even save more money if you find one with less RAM, no iDRAC Enterprise, no 10Gbit card, and 1 PSU. eBay has some slick deals for bargain hunters. I’m sure the most savvy of us can probably get a way better deal but I ain’t got time for that. Going custom with a 4u chassis leaves me room for experimentation and growth, which is way up my alley. At the end of the day, I think it really comes down to what you expect to do. If I had to do it all over again, I would have started with a custom build.